Ethical Concerns in Artificial Intelligence Explained


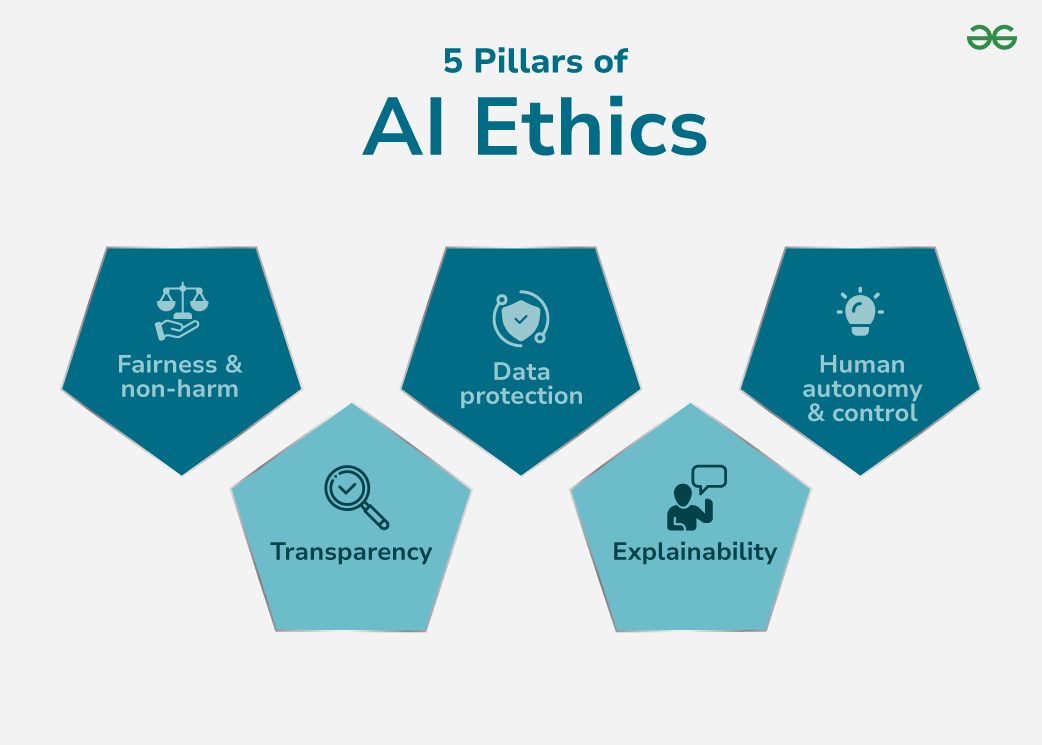

In recent years, artificial intelligence (AI) has advanced significantly and has become an integral part of daily life. From virtual assistants to self-driving cars, AI technology continues to transform many industries. As these systems take on more consequential tasks, however, their ethical implications have raised serious concerns.

Transparency and Accountability

One of the primary ethical concerns surrounding AI is the lack of transparency and accountability. AI algorithms are often complex and opaque, which makes it difficult to understand how they reach their decisions. This opacity makes biased or discriminatory outcomes harder to detect, explain, and contest. Developers should therefore design AI systems whose decisions can be inspected and explained, and the organizations that build and deploy these systems must remain accountable for their outcomes.

Privacy and Data Security

AI systems rely on vast amounts of data to operate effectively. This data is essential for training algorithms, but it also raises significant privacy concerns. Because AI systems collect and analyze personal information, they create risks of data breaches and unauthorized use of data. Organizations must prioritize data security and implement robust privacy measures to protect user information.

Bias and Discrimination

An AI system is only as unbiased as the data it is trained on. If the training data reflects historical or sampling biases, the resulting model is likely to reproduce, and sometimes amplify, those biases. This can lead to discrimination in areas such as hiring decisions, loan approvals, and criminal justice. To mitigate bias and discrimination, developers must carefully select and preprocess training data and check model outcomes for fairness across different groups.
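One common way to check outcomes for fairness is to compare how often a model produces a favorable decision for each group. The sketch below is a minimal illustration and assumes decisions are available as hypothetical `(group, approved)` pairs. The helper names `selection_rates` and `disparate_impact_ratio` are introduced here for illustration only and are not taken from any particular library. The ratio divides the lowest group's approval rate by the highest group's rate, so a value of 1.0 means every group is approved at the same rate. A frequently cited heuristic, the "four-fifths rule" from US employment guidance, treats ratios below 0.8 as a warning sign.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of favorable outcomes per group.

    `decisions` is a list of (group, approved) pairs (hypothetical format),
    where `approved` is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    highest = max(rates.values())
    # If no group receives any favorable outcomes, treat the groups as equal.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical loan decisions from a model: (applicant group, approved?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33
```

In this made-up example, group B is approved at one third the rate of group A. That result would warrant a closer look at the training data and the model. A metric like this can reveal a disparity, but it cannot explain the cause or establish on its own whether the disparity is justified.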

Autonomy and Control

As AI technology becomes more advanced, there is growing concern about how much autonomy AI systems should have and how they can be controlled. Some fear that AI could eventually surpass human intelligence and decision-making capabilities. This fear raises the question of who is ultimately responsible for the actions of AI systems. Developers must establish clear guidelines and boundaries for AI systems to ensure that they remain under human control.

Ethical Decision-Making

AI systems are increasingly used to make decisions with ethical consequences, for example in healthcare and autonomous vehicles. Delegating such judgments to machines raises serious questions about the moral implications of these decisions. Developers should build ethical guidelines and principles into AI systems so that their decisions align with societal values and norms.

Conclusion

As AI technology continues to advance, it is crucial to address the ethical concerns that come with its development and implementation. Transparency, accountability, privacy, bias, autonomy, and ethical decision-making are just a few of the key issues that need to be considered when designing and deploying AI systems. By prioritizing ethical considerations, developers can ensure that AI technology benefits society while minimizing potential harms.

Ethical concerns in artificial intelligence are complex and multifaceted. Addressing them effectively will require a collaborative effort from stakeholders across many industries.